CheckUrl is a REXX program that automatically checks whether the URLs contained in a file are valid (i.e. they exist). It is useful for finding dead URLs in your Netscape bookmark file or in any other HTML file containing links, including online pages. CheckUrl supports both HTTP and FTP URLs, and can use multiple connections to check more than one URL simultaneously.

CheckUrl

Version: 1.6.2

Release date: Tuesday, 25 April, 2000

License: Open source (generic)

Interface: VIO

Manual installation

The program is distributed as a ZIP package: download it to a temporary directory and unpack it to the destination folder.

The following are the download links for manual installation:

| CheckUrl v. 1.6.2 (25/4/2000, Francesco Cipriani) | Readme/What's new |

CheckUrl v 1.6.2

written by Francesco Cipriani

# Description

CheckUrl is a REXX program that automatically checks whether the URLs contained in a file are valid (i.e. they exist). It is useful for finding dead URLs in your Netscape bookmark file or in any other HTML file containing links, including online pages.

CheckUrl supports both HTTP and FTP URLs, and can use multiple connections to check more than one URL simultaneously.

# Features

* FTP and HTTP URL checking

* HTML file checking (Netscape bookmark or any other HTML file)

* Online URL checking

* Multiple connections to check many urls simultaneously

* Multi pass mode (checks a URL up to x times if an error occurs)

* Configurable timeout support when connecting or receiving data

* Plain text log and HTML + text report
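As an illustration of the HTML file checking feature, here is a minimal sketch of how HTTP and FTP links could be pulled out of a bookmark or HTML page before they are checked. It is written in Python rather than REXX and is not CheckUrl's own code; the names LinkCollector and extract_links are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class LinkCollector(HTMLParser):
    """Collects the href targets of <a> tags that use http:// or ftp://."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value and value.lower().startswith(("http://", "ftp://")):
                self.links.append(value)


def extract_links(html: str) -> List[str]:
    """Return the HTTP and FTP links found in the given HTML text, in order."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links
```

Links using other schemes (for example mailto:) are skipped in this sketch, since the README only mentions HTTP and FTP support.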

# System requirements

CheckUrl needs two REXX DLLs to work, Rxftp and Rxsock by IBM. You can download them by following the links on my web page at

http://village.flashnet.it/~rm03703/programs

You must also have a TCP/IP stack running (version 2.0 or later).

# Configuration

Edit checkurl.cfg and change the values to suit your needs. The file contains a description of each keyword.

# Multiple connections

With this feature (enabled by the /mconn parameter), CheckUrl can be many times faster than with a single connection, both because it makes better use of the bandwidth and because, if one site is slow to reply, the other tasks continue their work.
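The idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: CheckUrl itself uses child processes, while the sketch swaps in a thread pool; check_all and the check callback are hypothetical names, and the callback stands in for whatever actually opens the HTTP or FTP connection.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def check_all(urls: Iterable[str],
              check: Callable[[str], bool],
              connections: int = 4) -> Dict[str, bool]:
    """Check every URL, running up to `connections` checks at the same time.

    A slow site only blocks the worker that is handling it; the other
    workers keep taking URLs from the list.
    """
    url_list = list(urls)
    with ThreadPoolExecutor(max_workers=connections) as pool:
        # pool.map preserves input order, so results line up with url_list.
        results = list(pool.map(check, url_list))
    return dict(zip(url_list, results))
```

Calling check_all(urls, my_checker, connections=8) returns a dictionary that maps each URL to True or False, where my_checker is any function you supply.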

To use multiple connections you must have the host "localhost" configured in your "hosts" file, usually located in c:\tcpip\etc\hosts. This is necessary because CheckUrl child processes need to know the host to connect to.

In the "hosts" file you should have the line

localhost 127.0.0.1

or any other IP address you are using for your loopback device.

You should also run "ifconfig lo 127.0.0.1" to set the IP address of the loopback device before using CheckUrl.

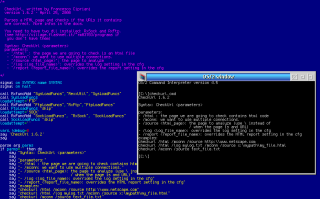

When using multiple connections, CheckUrl displays the status of each connection and a progress bar at the top of the screen. Each connection line looks like:

C # [t] (s) url

where

- # is the connection number

- t is the try number

- s is the number of seconds the connection has been checking the URL, or waiting to be assigned a URL to check

- url is the URL being checked
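To make the layout of a connection line concrete, here is a small Python sketch that builds one from its four fields. The function name format_status is hypothetical, and the exact spacing and padding CheckUrl uses on screen is an assumption based only on the pattern shown above.

```python
def format_status(conn: int, attempt: int, seconds: int, url: str) -> str:
    """Build one status line following the pattern 'C # [t] (s) url'."""
    return f"C {conn} [{attempt}] ({seconds}) {url}"
```

For example, connection 2 on its first try, 7 seconds into checking http://www.netscape.com, would give the line "C 2 [1] (7) http://www.netscape.com".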

# Multi pass mode

Sometimes a URL is unavailable at one moment and available a few seconds later, because a server was temporarily down or there was a problem reaching the host.

CheckUrl can therefore check a URL up to x times during the same run; you can set the maximum number of retries in checkurl.cfg (maxtries keyword).
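The multi pass idea can be sketched as a simple retry loop. This Python sketch is an illustration, not CheckUrl's REXX code: check_with_retries and the check callback are hypothetical, and treating maxtries as the total number of attempts (rather than retries after the first one) is an assumption.

```python
import time
from typing import Callable


def check_with_retries(url: str,
                       check: Callable[[str], bool],
                       maxtries: int = 3,
                       delay: float = 0.0) -> int:
    """Return the attempt number that succeeded, or 0 if every attempt failed."""
    for attempt in range(1, maxtries + 1):
        if check(url):
            return attempt
        # Optionally wait before trying again, but not after the last attempt.
        if attempt < maxtries and delay > 0:
            time.sleep(delay)
    return 0
```

A URL that fails twice and then answers would be reported as good on attempt 3, instead of being listed as dead after the first error.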

# Bad url file

Every time CheckUrl finishes its work, it writes the file badurl.lst, which contains a list of the URLs whose check reported an error (not a warning). This is useful for rechecking those bad URLs several times on different days, to be sure they are really dead, before deleting them from your source file.

For example, to safely check your Netscape bookmark file, you can run

checkurl /mconn /html /source bookmark.htm

the first time, and then

checkurl /mconn /source badurl.lst

on subsequent runs, rechecking the bad URLs left over from the previous check.
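The filtering step behind the bad URL file can be sketched as follows. This is a Python illustration under assumptions: the status labels "ok", "warning" and "error" and the function name write_bad_urls are hypothetical, and the one-URL-per-line layout is inferred from the fact that badurl.lst is fed back through /source without /html.

```python
from pathlib import Path
from typing import Iterable, List, Tuple


def write_bad_urls(results: Iterable[Tuple[str, str]],
                   path: str = "badurl.lst") -> List[str]:
    """Write the URLs whose status is "error" to `path`, one per line.

    Warnings are deliberately left out, so only real failures are rechecked.
    """
    bad = [url for url, status in results if status == "error"]
    Path(path).write_text("".join(url + "\n" for url in bad), encoding="utf-8")
    return bad
```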

# Parameters

- /html : the page to check contains HTML code

- /mconn : use multiple connections

- /source <html_page> : the page to analyze. IMPORTANT: use \ instead of / when the page is a URL (this is necessary due to the behaviour of the REXX interpreter)

- /log <text_log_file> : override the logfile setting in checkurl.cfg by specifying a different file

- /report <html_log_file> : override the htmllogfile setting in checkurl.cfg by specifying a different file

Examples:

"checkurl /html /mconn /source http:\\www.netscape.com"

Checks the online URL http://www.netscape.com, which is an HTML page, using multiple connections

"checkurl /html /mconn /source x:\mypath\my_file.html"

Checks the local file x:\mypath\my_file.html, which is an HTML file, using multiple connections

"checkurl /mconn /source text_file.txt"

Checks the file text_file.txt, which contains plain text with one URL on each line
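The parameter convention above, including the backslash rule for online sources, can be sketched in Python as follows. This is not CheckUrl's actual parser: parse_args and source_to_url are hypothetical names, and the error handling is an assumption.

```python
from typing import Dict, List, Union

FLAGS = {"/html", "/mconn"}                # switches without a value
VALUED = {"/source", "/log", "/report"}    # switches followed by a value


def parse_args(argv: List[str]) -> Dict[str, Union[bool, str]]:
    """Parse CheckUrl-style parameters into a dictionary of options."""
    opts: Dict[str, Union[bool, str]] = {"html": False, "mconn": False}
    i = 0
    while i < len(argv):
        key = argv[i]
        if key in FLAGS:
            opts[key[1:]] = True
        elif key in VALUED:
            if i + 1 >= len(argv):
                raise ValueError(f"{key} needs a value")
            i += 1
            opts[key[1:]] = argv[i]
        else:
            raise ValueError(f"unknown parameter: {key}")
        i += 1
    return opts


def source_to_url(source: str) -> str:
    """Turn a backslash-written URL back into a normal one.

    Local paths such as x:\\mypath\\my_file.html are returned unchanged.
    """
    if source.lower().startswith(("http:\\\\", "ftp:\\\\")):
        return source.replace("\\", "/")
    return source
```

With the first example above, the /source value http:\\www.netscape.com would be converted back to http://www.netscape.com before the page is fetched, while a local path keeps its backslashes.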

# Disclaimer

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

# Freeware

CheckUrl is released as freeware, so use it and distribute it freely, but please contact me if you are using it or if you find a bug.

# Todo

* Support for https (I still have to test it)

# The end

Francesco Cipriani - April 25, 2000

fc76@softhome.net

--------------------------------

CheckUrl 1.6.2 what's new

M modified

+ new

- deleted

1.6.2 + Added the /log <filename> and /report <filename> command line options, which override the related settings (logfile and htmllogfile) in checkurl.cfg.

M Fixed a bug in the command line parser

M Removed the /c parameter for detach when using multiple connections: some users reported it was broken on their system

M Corrected a bug which caused URLs on the same level not to be parsed correctly |

www.hobbesarchive.com/Hobbes/pub/os2/apps/webbrowser/util/CheckUrl_1-6-2.zip |

local copy

|

Record updated last time on: 26/10/2024 - 10:30

This work is licensed under a Creative Commons Attribution 4.0 International License.
